On the 12th, according to sources cited by the media, OpenAI, the leader in generative AI, is developing a new large AI model project code-named “Strawberry”, about which very little is publicly known.

What is OpenAI’s mysterious project “Strawberry”?

According to media reports, an internal OpenAI document from May shows that the “Strawberry” project, under development by an internal team, aims to strengthen the reasoning ability of OpenAI’s models and their ability to handle complex scientific and mathematical problems. The goal is for large models not only to generate answers to queries but also to plan ahead, so that they can browse the Internet autonomously and reliably and carry out what OpenAI calls “deep research”.

According to more than a dozen AI researchers, this is a capability that large language models have not yet achieved.

OpenAI clearly does not want to disclose details about “Strawberry” at this stage.

When asked about the details of “Strawberry,” an OpenAI spokesperson answered only indirectly: “We hope that AI models can see and understand the world as humans do. Continuously researching new AI capabilities is common practice in the industry, as we all believe that AI’s reasoning ability will continue to improve over time.”

Even within OpenAI, how “Strawberry” works is a closely guarded secret, and there is no word yet on when it will be released.

However, some media outlets have reported that the predecessor of “Strawberry” was Q* (Q-Star), an algorithmic model capable of solving difficult scientific and mathematical problems. Mathematics is regarded as a foundation for the development of generative AI: if AI models master mathematics, they should gain stronger reasoning abilities, possibly even comparable to human intelligence. This, too, is something current large language models cannot yet do.

Q* first came to light last year in an internal letter at OpenAI, and the Q* project was reportedly linked to the firing of CEO Sam Altman.

Some OpenAI insiders have said that Q* could be a breakthrough in OpenAI’s pursuit of artificial general intelligence (AGI), and that its rapid progress was alarming, raising concerns that fast-moving AI could threaten human safety. As those concerns spread, Altman chose to accelerate the development and commercialization of the GPT series without informing the board, which angered the OpenAI board and led to its decision to remove him.

Using “Strawberry” to Improve the Reasoning Ability of Large Models

Although detailed information about “Strawberry” is not available, recent signals from OpenAI suggest that improving the reasoning ability of generative AI models is its next focus.

OpenAI CEO Sam Altman has emphasized that the future of AI development will revolve around reasoning ability.

At an internal all-hands meeting this Tuesday, OpenAI demonstrated a research project that it said has human-like reasoning abilities. An OpenAI spokesperson confirmed the meeting to the media but declined to share details, so it is unclear whether the project demonstrated was “Strawberry”.

According to insiders, “Strawberry” involves a specialized form of “post-training”, in which a generative AI model that has already been pre-trained on a large dataset is further adapted to improve its performance on specific tasks. This is similar to the Self-Taught Reasoner (STaR) method developed at Stanford University in 2022.

One of STaR’s creators, Stanford professor Noah Goodman, has said that STaR lets AI models “bootstrap” themselves to higher levels of intelligence by iteratively creating their own training data. In theory, it could be used to enable language models to surpass human-level intelligence.
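Neither the reports nor OpenAI describe how “Strawberry” is actually trained, so the following is only a toy sketch of the published STaR idea, not of OpenAI’s method. In STaR, a model attempts each problem with a step-by-step rationale; rationales that reach the correct answer are kept, failed problems can be retried with the correct answer supplied as a hint (“rationalization”), the model is fine-tuned on the kept set, and the loop repeats. The sketch assumes simple addition problems and uses hypothetical stand-ins (`ToyModel`, `generate`, `fine_tune`) in place of a real language model and a real training run.

```python
from __future__ import annotations

import random
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Problem:
    """A toy task (adding two integers) standing in for a reasoning question."""
    a: int
    b: int

    @property
    def answer(self) -> int:
        return self.a + self.b


@dataclass
class ToyModel:
    """Hypothetical stand-in for a language model (not a real API).

    It reproduces rationales it was fine-tuned on and otherwise guesses,
    sometimes getting the answer wrong.
    """
    learned: dict = field(default_factory=dict)  # Problem -> rationale string
    rng: random.Random = field(default_factory=lambda: random.Random(0))

    def generate(self, p: Problem, hint: int | None = None) -> tuple[str, int]:
        if p in self.learned:
            # Fine-tuned behavior: reuse the learned (correct) rationale.
            return self.learned[p], p.answer
        if hint is not None:
            # "Rationalization": given the correct answer as a hint,
            # write a rationale that arrives at it.
            return f"{p.a} + {p.b} = {hint}", hint
        # Unassisted attempt: correct about half the time, otherwise off by one.
        guess = p.answer + self.rng.choice([0, 0, 1, -1])
        return f"{p.a} + {p.b} = {guess}", guess


def fine_tune(dataset: dict, seed: int) -> ToyModel:
    """Toy 'fine-tuning': a fresh model that has learned the kept rationales.

    STaR restarts from the base model each iteration instead of continuing
    from the previous checkpoint; building a new ToyModel mirrors that.
    """
    return ToyModel(learned=dict(dataset), rng=random.Random(seed))


def star(problems, iterations: int = 3, rationalize: bool = True, seed: int = 0):
    """Run the outer STaR loop.

    Returns the final model and the number of examples kept per iteration.
    """
    model = ToyModel(rng=random.Random(seed))  # the "base" model
    kept_per_iter = []
    for n in range(iterations):
        dataset = {}
        for p in problems:
            rationale, ans = model.generate(p)
            if ans != p.answer and rationalize:
                # Retry a failed problem with the correct answer as a hint.
                rationale, ans = model.generate(p, hint=p.answer)
            # Filter: keep only rationales that reach the correct answer.
            if ans == p.answer:
                dataset[p] = rationale
        kept_per_iter.append(len(dataset))
        model = fine_tune(dataset, seed=seed + n + 1)
    return model, kept_per_iter


if __name__ == "__main__":
    problems = [Problem(a, b) for a in range(5) for b in range(5)]
    for flag in (False, True):
        _, sizes = star(problems, iterations=4, rationalize=flag)
        print(f"rationalize={flag}: examples kept per iteration {sizes}")
```

With `rationalize=False`, only lucky guesses are kept at first, and the kept set can only grow from one iteration to the next, which is the self-generated-data bootstrapping Goodman describes; with rationalization enabled, every problem contributes a correct rationale from the first iteration.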

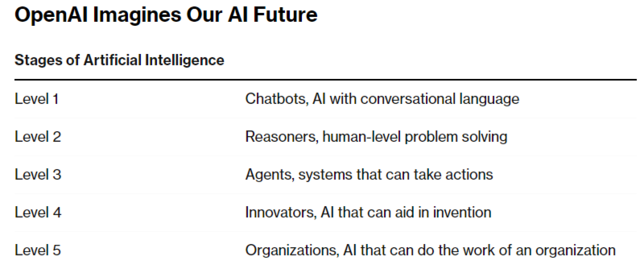

This aligns with OpenAI’s push for reasoning ability. OpenAI also released a five-level roadmap for the future development of AI on the 11th.

In this roadmap, OpenAI envisions AI progressing through five stages:

Level 1: Chatbots, AI with conversational language

Level 2: Reasoners, human-level problem solving

Level 3: Agents, systems that can take actions

Level 4: Innovators, AI that can aid in invention

Level 5: Organizations, AI that can do the work of an organization

Based on the information available so far, “Strawberry” is very likely the key to helping OpenAI reach Level 2.

According to media reports, an OpenAI executive said that its AI models are currently at Level 1 but are expected to reach Level 2, reasoning, soon. OpenAI is working toward PhD-level intelligence on specific tasks, which it expects to achieve within a year to a year and a half.

Another capability OpenAI is focusing on is improving large language models’ ability to perform long-horizon tasks (LHT), which require a model to plan ahead and carry out a series of actions over an extended period.

According to insiders, to reach this goal OpenAI is creating, training, and evaluating “Strawberry” models on what it calls a “deep research” dataset, and hopes to use a “computer-using agent” (CUA) that can autonomously browse web pages and take actions based on what it finds.

If OpenAI succeeds, the “Strawberry” project is likely to redefine AI’s capabilities, enabling it to make significant scientific discoveries, develop new software applications, and autonomously perform complex tasks, taking humanity one step closer to AGI.
